Hello,

Today we will build a small integration proof of concept (POC) that transfers data from Salesforce to Amazon S3 using Amazon AppFlow.

Overview of AppFlow service:

Amazon AppFlow is a fully managed integration service that enables us to securely transfer data between Software-as-a-Service (SaaS) applications like Salesforce, SAP, Zendesk, Slack, and ServiceNow, and AWS services like Amazon S3 and Amazon Redshift without much coding.

Note: Amazon AppFlow usage is charged according to the pricing published by AWS.

Prerequisites:

- Empty S3 bucket named "salesforcetos3test"

- Salesforce Developer account

- Log in to the Salesforce developer account

Steps:

Log in to the AWS console and navigate to the AppFlow service.

Click the "Create Flow" button.

- Enter the flow name and an optional description. Click the Next button.

- Under Source, select "Salesforce" and click Connect.

- Select "Production" as the Salesforce environment (Developer Edition accounts log in through the production login, not the sandbox one). Provide a connection name and click Continue.

- Log in (if not already logged in) to the Salesforce developer account, and click "Allow" when prompted to grant access.

- Select "Salesforce objects" and then select Account, since we want to import all data of the Account object from Salesforce to AWS.

- Under Destination, select "Amazon S3".

- Select the S3 bucket that was created as a prerequisite.

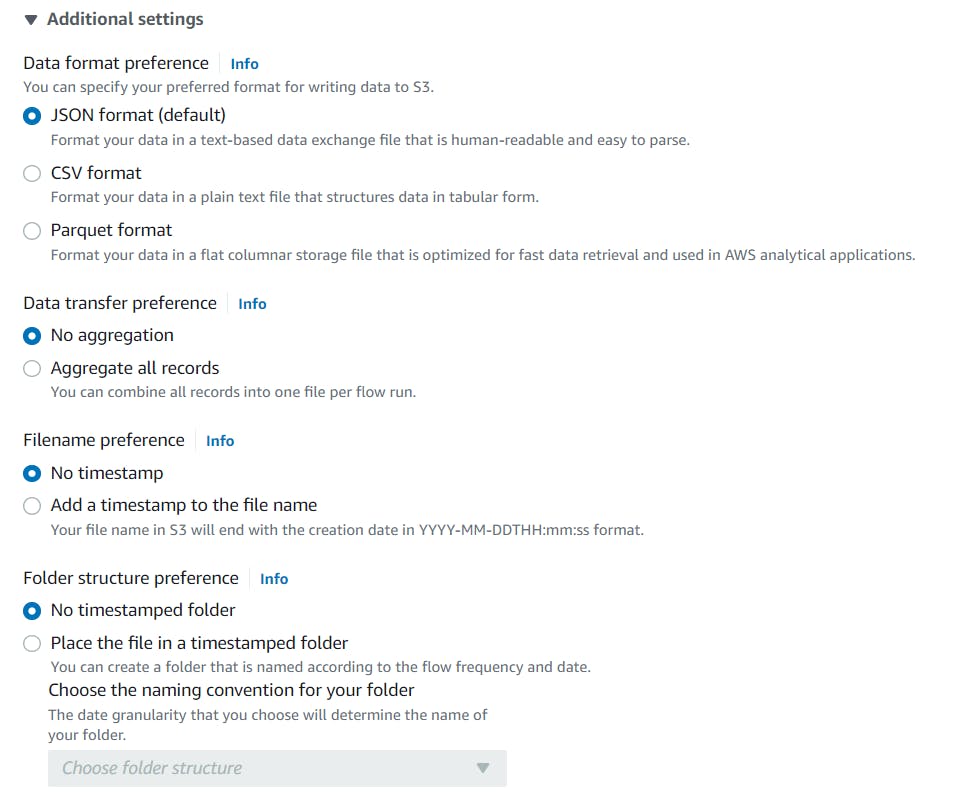

- We will keep the defaults for the additional settings. Further options, such as data format preferences, are available as shown in the image below.

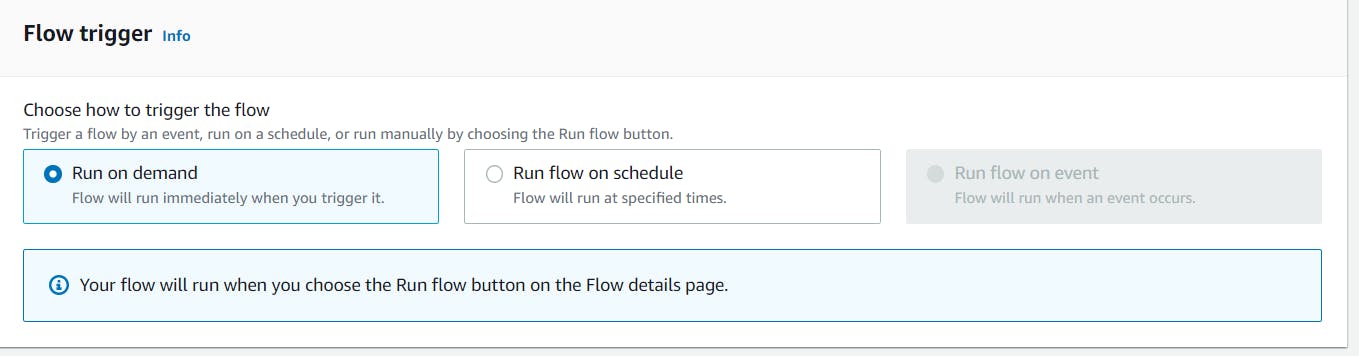

- Select when the flow should trigger. For this POC we select "Run on demand". There is also an option to run on a schedule, which is useful when data must be pulled from Salesforce at regular intervals.

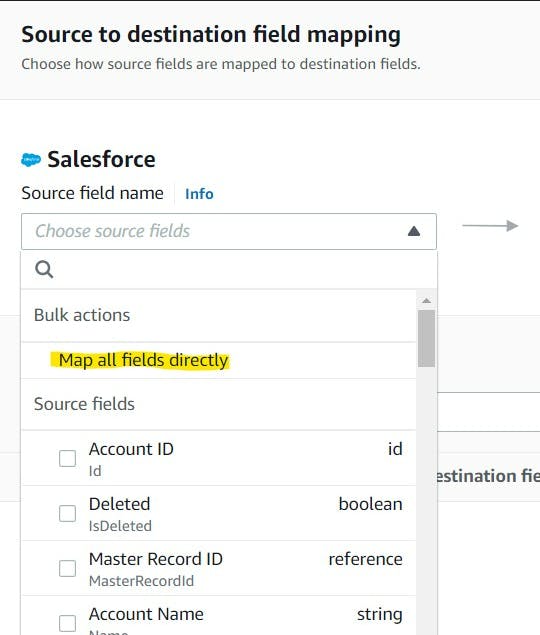

- Select "Manually map fields" as the mapping method.

- Under "Source to destination field mapping", click "Choose source fields" and select "Map all fields directly".

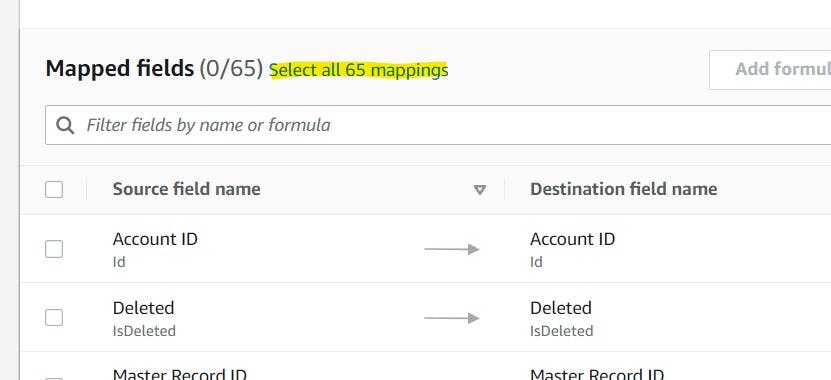

- Under Mapped fields click on "Select all 65 mappings"

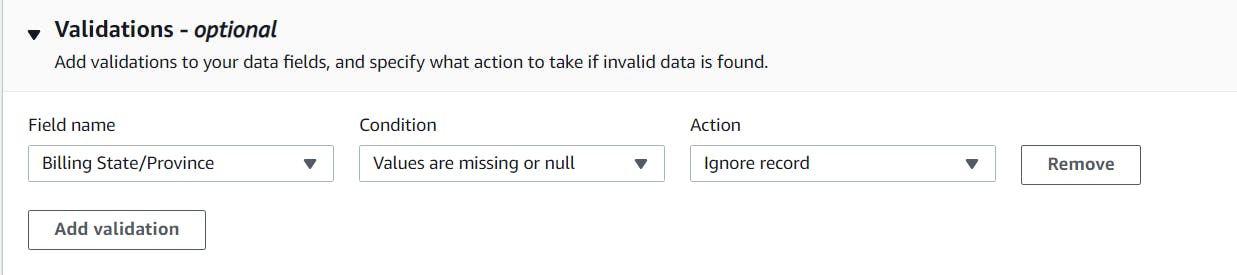

- We can optionally add validations to drop invalid data. For this POC we will drop the Account records in Salesforce that do not have a Billing State/Province.

- Click Next. We can add filters here if needed. For now we will not add any filters, so click Next again.

- On the next screen, review the flow and click "Create flow".

- Once the flow is set up, the "Run flow" button is enabled. Click it to trigger the flow.

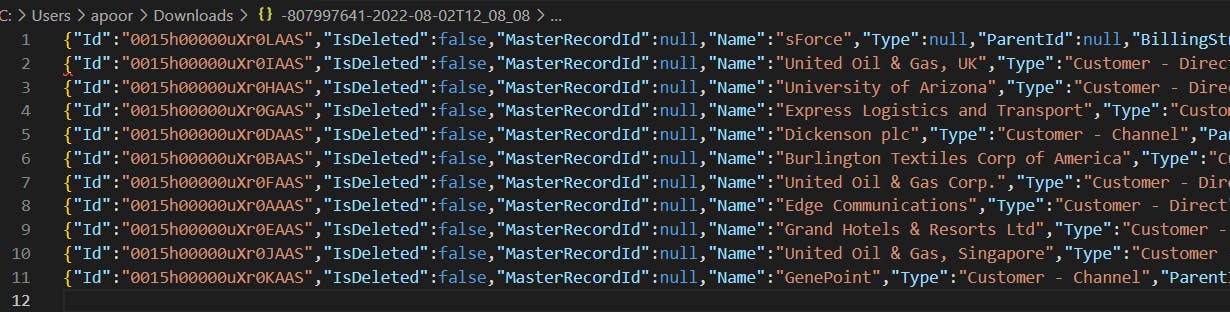

- Once the flow has completed, we get a success message. Navigate to the S3 bucket to find the output file, and download it to view its contents.
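
The console steps above can also be approximated in code. The following is a minimal sketch, assuming the boto3 `appflow` client and a Salesforce connection that already exists in AppFlow. The flow name and connection name are whatever you chose in the console, and the function names are hypothetical. It uses a single `Map_all` task rather than the individual field mappings the console generates, and it assumes `BillingState` is the API name of the Billing State/Province field.

```python
def build_flow_request(flow_name, connection_name, bucket_name):
    """Return keyword arguments for the AppFlow create_flow call.

    connection_name is the name of an existing Salesforce connection
    (an assumption: it must have been created beforehand).
    """
    return {
        "flowName": flow_name,
        # "Run on demand" in the console
        "triggerConfig": {"triggerType": "OnDemand"},
        "sourceFlowConfig": {
            "connectorType": "Salesforce",
            "connectorProfileName": connection_name,
            "sourceConnectorProperties": {"Salesforce": {"object": "Account"}},
        },
        "destinationFlowConfigList": [
            {
                "connectorType": "S3",
                "destinationConnectorProperties": {
                    "S3": {"bucketName": bucket_name}
                },
            }
        ],
        "tasks": [
            # Map every source field directly to the destination
            {
                "taskType": "Map_all",
                "sourceFields": [],
                "connectorOperator": {"Salesforce": "NO_OP"},
                "taskProperties": {"EXCLUDE_SOURCE_FIELDS_LIST": "[]"},
            },
            # Drop records whose Billing State/Province is missing
            # (assumed to correspond to the console validation used above)
            {
                "taskType": "Validate",
                "sourceFields": ["BillingState"],
                "connectorOperator": {"Salesforce": "VALIDATE_NON_NULL"},
                "taskProperties": {"VALIDATION_ACTION": "DropRecord"},
            },
        ],
    }


def create_and_run_flow(request):
    """Create the flow and trigger one on-demand run (needs AWS credentials)."""
    import boto3  # imported lazily so the request builder works without boto3

    client = boto3.client("appflow")
    client.create_flow(**request)
    return client.start_flow(flowName=request["flowName"])
```

Keeping the request builder separate from the AWS call makes it possible to inspect or version the flow definition before anything is created in the account.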

For this POC we had 13 records for the Account object in Salesforce, 2 of which had a blank Billing State/Province field. The JSON file created in the S3 bucket after the flow ran contains 11 records, so the validation rule we added earlier excluded the 2 records successfully.
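
To double-check the count, the downloaded file can be inspected locally. This is a minimal sketch, assuming the output file holds one JSON record per line and that the field appears as `BillingState`. The helper names are hypothetical.

```python
import json


def load_json_lines(text):
    """Parse a file body that holds one JSON object per non-empty line."""
    return [json.loads(line) for line in text.splitlines() if line.strip()]


def drop_missing(records, field="BillingState"):
    """Keep only records where the field is present and not blank."""
    return [r for r in records if r.get(field) not in (None, "")]


def summarize(text, field="BillingState"):
    """Return (total records, records that have a value for the field)."""
    records = load_json_lines(text)
    return len(records), len(drop_missing(records, field))
```

Running `summarize` on the file from S3 should report the same number twice, since AppFlow has already dropped the blank records. Running it on a raw export of all Accounts would show the difference.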

Also, if we check the S3 bucket policy, we can see that AppFlow has updated it to allow the data import from the external SaaS platform.
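
One way to see what was added is to read the bucket policy and pick out the statements that name AppFlow. This is a minimal sketch, assuming the added statements use the `appflow.amazonaws.com` service principal. The helper names are hypothetical, and the exact actions granted are not shown here because they are not part of this walkthrough.

```python
import json


def appflow_statements(policy_json, service="appflow.amazonaws.com"):
    """Return the policy statements whose principal is the given service."""
    statements = json.loads(policy_json).get("Statement", [])
    if isinstance(statements, dict):  # a single statement may not be a list
        statements = [statements]
    matches = []
    for statement in statements:
        principal = statement.get("Principal", {})
        services = principal.get("Service", []) if isinstance(principal, dict) else []
        if isinstance(services, str):
            services = [services]
        if service in services:
            matches.append(statement)
    return matches


def fetch_bucket_policy(bucket_name):
    """Fetch the bucket policy as a JSON string (needs AWS credentials)."""
    import boto3  # imported lazily so the parser works without boto3

    return boto3.client("s3").get_bucket_policy(Bucket=bucket_name)["Policy"]
```

For example, `appflow_statements(fetch_bucket_policy("salesforcetos3test"))` would list the statements to review for the bucket used in this POC.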

With that we have completed a quick integration. Thanks for reading 😃

References: